Containerization has changed the way we develop, deploy, and manage applications. Over the past four years, Docker has transformed how I run my home lab and host services. Its lightweight containers and versatility have streamlined my workflow, made me more efficient, and made my projects far easier to manage.

The Beginning of the Docker Odyssey

When I first encountered Docker, I was captivated by its promise of encapsulating applications within lightweight, portable containers. The idea of isolating services, enabling easy deployment across different environments, and eliminating the dreaded “it works on my machine” dilemma was truly groundbreaking.

Simplifying Home Labs with Docker

One of the most significant advantages Docker brought to my home lab was the ability to run many services side by side without the overhead of traditional virtual machines. With Docker, I could give each service (a web server, a database, or any other application) its own container, isolated from the others yet sharing the same hardware.

This setup let me experiment freely, test different configurations, and easily roll back changes when something didn’t work as expected. And because containers are so resource-efficient, I could run many services at once on the same machine without noticeably degrading performance or wasting system resources.

Hosting Services Made Effortless

Docker has become central to how I host services. Whether it’s a personal website, a file-sharing service, or a media server, being able to deploy it the same way every time has been invaluable. Pulling pre-built images from Docker Hub, or building custom images tailored to my specific needs, quickly became second nature.

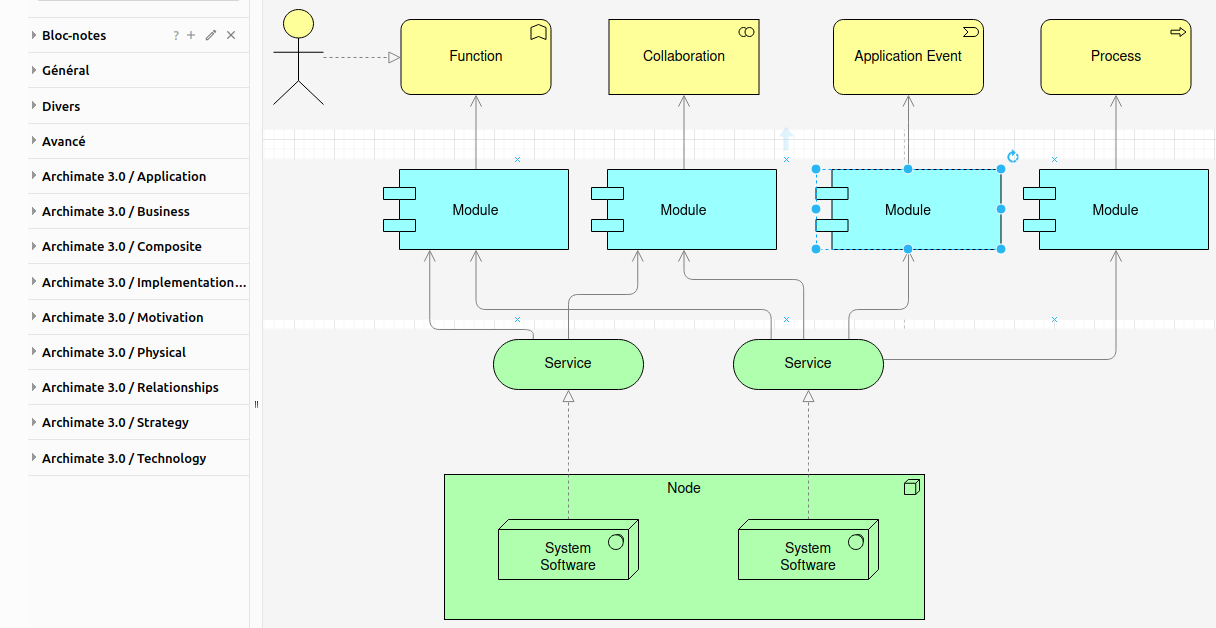

Docker’s networking also made it easy for containers to talk to each other. Containers attached to the same user-defined network can reach one another by container name through Docker’s built-in DNS, so services can interact without hand-written networking configuration. This simplified the setup of multi-service architectures and let me focus on functionality rather than infrastructure.

Lightweight Yet Powerful

One of Docker’s most appealing features is how little overhead it adds. Traditional virtualization runs a separate guest operating system inside each virtual machine. Docker containers instead share the host system’s kernel and are isolated from one another using kernel features such as namespaces and cgroups, so they consume far fewer resources while still keeping services separated.

This small footprint not only made better use of my hardware but also let me run containers on modest machines, which makes Docker a natural fit for resource-constrained environments such as home labs and low-powered devices.

The Future of Docker and Home Labs

Looking back on my four years with Docker, I can see how profound its impact on my home lab and service hosting has been. The ecosystem continues to evolve, and orchestration tools such as Kubernetes and Docker Swarm make it practical to manage containerized applications at scale.

Looking ahead, I foresee Docker continuing to play a pivotal role in home labs, empowering enthusiasts and professionals alike to experiment, innovate, and build robust infrastructures without the complexities of traditional setups.

Final Thoughts

Docker has been a game-changer for my home lab: it has simplified service hosting, added flexibility, and made better use of my hardware. Its lightweight, portable containers have fundamentally changed how I develop, deploy, and manage services.

As I move into the next phase of this journey, I’m excited about continued advances in containerization and the possibilities they open up for home lab enthusiasts and developers worldwide. Docker has left a lasting mark on how I work, and I’m looking forward to the innovations still to come.
